
September 21, 2023

A team led by researchers at the University of Washington has developed a shape-changing smart speaker, which uses self-deploying microphones to divide rooms into speech zones and track the positions of individual speakers. Here UW doctoral students Tuochao Chen (foreground), Mengyi Shan, Malek Itani, and Bandhav Veluri, all in the Paul G. Allen School of Computer Science & Engineering, demonstrate the system in a meeting room. April Hong/University of Washington

In virtual meetings, it’s easy to keep people from talking over each other. Someone just hits mute. But for the most part, this ability doesn’t translate easily to recording in-person gatherings. In a bustling cafe, there are no buttons to silence the table beside you.

The ability to locate and control sound (isolating one person talking from a specific location in a crowded room, for instance) has challenged researchers, especially without visual cues from cameras.

A team led by researchers at the University of Washington has developed a shape-changing smart speaker, which uses self-deploying microphones to divide rooms into speech zones and track the positions of individual speakers. With the help of the team’s deep-learning algorithms, the system lets users mute certain areas or separate simultaneous conversations, even if two adjacent people have similar voices. Like a fleet of Roombas, each about an inch in diameter, the microphones automatically deploy from, and then return to, a charging station. This allows the system to be moved between environments and set up automatically. In a conference room meeting, for instance, such a system might be deployed instead of a central microphone, allowing better control of in-room audio.

The team published its findings Sept. 21 in Nature Communications.

“If I close my eyes and there are 10 people talking in a room, I do not know who’s saying what and where they are in the room exactly. That’s extremely hard for the human brain to process. Until now, it’s also been difficult for technology,” said co-lead author Malek Itani, a UW doctoral student in the Paul G. Allen School of Computer Science & Engineering. “For the first time, using what we’re calling a robotic ‘acoustic swarm,’ we’re able to track the positions of multiple people talking in a room and separate their speech.”

Previous research on robot swarms has required using overhead or on-device cameras, projectors or special surfaces. The UW team’s system is the first to accurately distribute a robot swarm using only sound.

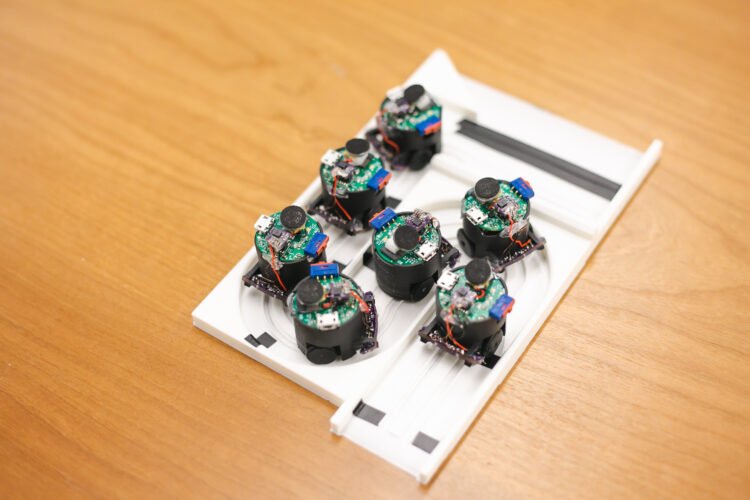

The team’s prototype consists of seven small robots that spread themselves across tables of various sizes. As they move from their charger, each robot emits a high-frequency sound, like a bat navigating, using this frequency and other sensors to avoid obstacles and move around without falling off the table. The automatic deployment allows the robots to place themselves for maximum accuracy, permitting greater sound control than if a person set them. The robots disperse as far from each other as possible, since greater distances make differentiating and locating people speaking easier. Today’s consumer smart speakers have multiple microphones, but clustered on the same device, they’re too close to allow for this system’s mute and active zones.

The tiny individual microphones are able to navigate around clutter and position themselves with only sound. April Hong/University of Washington

“If I have one microphone a foot away from me, and another microphone two feet away, my voice will arrive at the microphone that’s a foot away first. If someone else is closer to the microphone that’s two feet away, their voice will arrive there first,” said co-lead author Tuochao Chen, a UW doctoral student in the Allen School. “We developed neural networks that use these time-delayed signals to separate what each person is saying and track their positions in a space. So you can have four people having two conversations and isolate any of the four voices and locate each of the voices in a room.”
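The arrival-order idea in the quote can be illustrated with a few lines of arithmetic. The sketch below is not the team's neural network; it only computes how long sound takes to reach each of two microphones, assuming a speed of sound of roughly 343 m/s and a hypothetical layout with both microphones and both speakers on one line.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: approximate speed of sound in room-temperature air
FOOT_M = 0.3048             # one foot in meters


def arrival_time(source, mic):
    """Seconds for sound to travel from source to mic; both are (x, y) in meters."""
    return math.dist(source, mic) / SPEED_OF_SOUND_M_S


def first_mic(source, mics):
    """Index of the microphone that the source's sound reaches first."""
    return min(range(len(mics)), key=lambda i: arrival_time(source, mics[i]))


# Hypothetical layout: speaker A at the origin, microphones 1 ft and 2 ft away.
speaker_a = (0.0, 0.0)
mic_near = (1 * FOOT_M, 0.0)
mic_far = (2 * FOOT_M, 0.0)
mics = [mic_near, mic_far]

# Hypothetical second speaker, sitting 1 ft beyond the far microphone.
speaker_b = (3 * FOOT_M, 0.0)

# Time difference of arrival for speaker A between the two microphones.
delay_a = arrival_time(speaker_a, mic_far) - arrival_time(speaker_a, mic_near)

print(f"A's voice reaches the near mic {delay_a * 1000:.2f} ms before the far mic")
print("Mic that hears A first:", first_mic(speaker_a, mics))
print("Mic that hears B first:", first_mic(speaker_b, mics))
```

Under these assumptions the gap between the two microphones is under a millisecond, which gives a sense of how small the delays are that the system has to work with.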

The team tested the robots in offices, living rooms and kitchens with groups of three to five people speaking. Across all these environments, the system could discern different voices within 1.6 feet (50 centimeters) of each other 90% of the time, without prior information about the number of speakers. The system was able to process three seconds of audio in 1.82 seconds on average, which is fast enough for live streaming, though a bit too long for real-time communications such as video calls.
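The timing figures above can be turned into a rough real-time factor. The sketch below uses only the two numbers reported in the article; the delay estimate additionally assumes, purely for illustration, that audio is collected in full three-second chunks before processing starts.

```python
AUDIO_CHUNK_S = 3.0   # seconds of audio per processed chunk (from the article)
PROCESSING_S = 1.82   # average processing time per chunk (from the article)


def real_time_factor(processing_s, audio_s):
    """Processing time divided by audio duration; below 1.0 means it keeps up."""
    return processing_s / audio_s


rtf = real_time_factor(PROCESSING_S, AUDIO_CHUNK_S)

# Assumption for illustration: a chunk must be fully recorded before it is
# processed, so the earliest sound in a chunk is delayed by the chunk length
# plus the processing time.
worst_case_delay_s = AUDIO_CHUNK_S + PROCESSING_S

print(f"Real-time factor: {rtf:.2f}")
print(f"Worst-case delay under the chunking assumption: {worst_case_delay_s:.2f} s")
```

A factor below 1.0 means the system never falls behind a continuous stream, while a delay of several seconds would be noticeable in a two-way conversation.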

As the technology progresses, researchers say, acoustic swarms might be deployed in smart homes to better differentiate people talking with smart speakers. That could potentially allow only people sitting on a couch, in an “active zone,” to vocally control a TV, for example.

To charge, the microphones automatically return to their charging station. April Hong/University of Washington

Researchers plan to eventually make microphone robots that can move around rooms, instead of being limited to tables. The team is also investigating whether the speakers can emit sounds that allow for real-world mute and active zones, so people in different parts of a room can hear different audio. The current study is another step toward science fiction technologies, such as the “cone of silence” in “Get Smart” and “Dune,” the authors write.

Of course, any technology that evokes comparison to fictional spy tools will raise questions of privacy. Researchers acknowledge the potential for misuse, so they have included guards against this: The microphones navigate with sound, not an onboard camera like other similar systems. The robots are easily visible and their lights blink when they’re active. Instead of processing the audio in the cloud, as most smart speakers do, the acoustic swarms process all the audio locally, as a privacy constraint. And even though some people’s first thoughts may be about surveillance, the system can be used for the opposite, the team says.

“It has the potential to actually benefit privacy, beyond what current smart speakers allow,” Itani said. “I can say, ‘Don’t record anything around my desk,’ and our system will create a bubble 3 feet around me. Nothing in this bubble would be recorded. Or if two groups are speaking beside each other and one group is having a private conversation, while the other group is recording, one conversation can be in a mute zone, and it will remain private.”
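The “bubble” in the quote can be pictured as a simple geometric filter. The sketch below is an illustration only, not the team's implementation: it assumes the system has already produced one separated stream per speaker along with an estimated position, and it uses hypothetical names (`in_mute_zone`, `filter_streams`) and made-up coordinates.

```python
import math

FOOT_M = 0.3048  # one foot in meters


def in_mute_zone(position, center, radius_m):
    """True if an estimated speaker position falls inside the circular mute zone."""
    return math.dist(position, center) <= radius_m


def filter_streams(streams, center, radius_m):
    """Keep only the separated streams whose speaker is outside the mute zone."""
    return [s for s in streams if not in_mute_zone(s["position"], center, radius_m)]


# Hypothetical separated streams with estimated (x, y) positions in meters.
streams = [
    {"speaker": "A", "position": (0.5, 0.2)},  # about 0.54 m from the desk
    {"speaker": "B", "position": (2.0, 1.5)},  # 2.5 m from the desk
]

# A 3-foot bubble around a desk placed at the origin.
kept = filter_streams(streams, center=(0.0, 0.0), radius_m=3 * FOOT_M)
print([s["speaker"] for s in kept])
```

In this example only speaker B, who is outside the 3-foot radius, would remain in the recording.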

Takuya Yoshioka, a principal research manager at Microsoft, is a co-author on this paper, and Shyam Gollakota, a professor in the Allen School, is a senior author. The research was funded by a Moore Inventor Fellow award.

For more information, contact acousticswarm@cs.washington.edu.
