“We shape our buildings; thereafter, they shape us.”

Winston Churchill was almost certainly referring to physical buildings when he spoke these words. But online social spaces shape our behavior, just as physical buildings do. And it is artificial intelligence that shapes social media (Eckles 2021, Narayanan 2023). AI is used to determine what’s at the top of our feeds (Backstrom 2016, Fischer 2020), who we might connect with (Guy 2009), and what should be moderated, labeled with a warning, or outright removed.

As the typical critique goes, social media AIs are optimized for engagement, and engagement signals such as clicks, likes, replies, and shares do play strong roles in these algorithms. But this is not the whole story. Recognizing the shortcomings of engagement signals, platforms have complemented their algorithms with a battery of surveys, moderation algorithms, downranking signals, peer effect estimation, and other inputs.

However, the values encoded in these signals overwhelmingly remain centered on the individual. Values centered on individual experience, such as personal agency, enjoyment, and stimulation, are undeniably important and central requirements for any social media platform. It should not be surprising that reward hacking solely on individual values will lead to troubling societal-level outcomes, because the algorithm has no way to reason about societies. But then, what would it even mean to algorithmically model societal-level values? How would you tell an algorithm that it needs to care about democracy in addition to agency and enjoyment?

Can we directly embed societal values into social media AIs?

In a new commentary published in the Journal of Online Trust & Safety, colleagues and I argue that it is now possible to directly embed societal values into social media AIs. Not indirectly, like balancing conservative and liberal items in your feed, but by treating them as direct objective functions of the algorithmic system. What if social media algorithms could give users and platforms levers to directly encode and optimize not just individual values, but also a wide variety of societal values? And if we can do that, could we also apply similar strategies to shape value alignment problems in AIs more broadly?

Efforts such as Reinforcement Learning from Human Feedback (RLHF; Ziegler et al. 2019) and Constitutional AI (Bai et al. 2022) provide some mechanisms for shaping AI systems, but we argue that there is a rich untapped vein: the social sciences. Although societal values may seem slippery, social scientists have collectively operationalized, measured, refined, and replicated these constructs over decades. And it is exactly this precision that now allows us to encode these values directly into algorithms. This raises an opportunity to create a translational science between the social sciences and engineering.

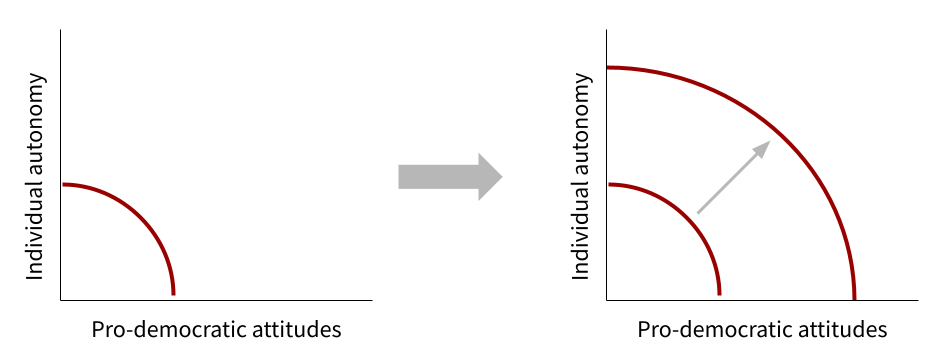

Ultimately, our perspective is that social media AIs may be suffering from a failure of imagination more so than any political impasse. We have labored under the pretense that, with these algorithms, there is a fundamental tradeoff between free speech on one hand and societal values on the other. But we believe that there remains substantial slack in the system: because we have not stopped to directly model the values at stake, algorithms are far underperforming their ability to optimize for these values. As a result, we believe that we can push out the Pareto frontier: that models can improve outcomes across multiple values simultaneously, simply by being explicit about those values.

Feed Algorithms Already Embed Values

There is no “neutral” here: whether we like it or not, feed algorithms already embed values. These algorithms have been trained, either explicitly or implicitly, to encode notions of content or behaviors that are considered “good,” such as content that garners more views. Values are beliefs about desirable end states; they derive from basic human needs and manifest in different ways across cultures (Rokeach 1973, Schwartz and Bilsky 1987, Schwartz 1992). News feed algorithms, like any designed artifact, reflect and promote the values of their developers (Seaver 2017).

Engagement signals, surveys, wellbeing measures, and other inputs to social media AIs focus on individual values, because they prioritize personal agency, individual enjoyment, and individual stimulation. If we want our feeds to consider other values, we need to model them directly.

A Translational Science from the Social Sciences to AI Model Design

Can we lift our gaze from individual to societal values? What does it mean to encode a societal value into an algorithm? If we cannot formulate an objective to say what we mean, then when we hand it off to an AI, we are condemned to mean what we say.

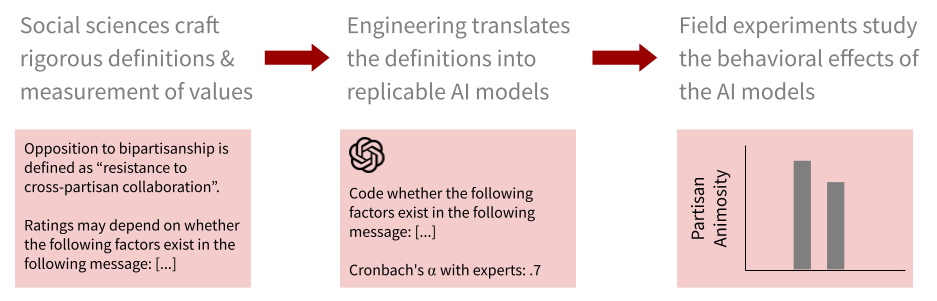

Fields such as sociology, political science, law, communication, public health, science and technology studies, and psychology have long developed constructs to operationalize, describe, and measure complex social phenomena. These constructs have been proven reliable through repeated study and testing. In doing so, social scientists often develop measurement scales or codebooks to promote inter-rater reliability and replicability. We observe that the precision in these codebooks and constructs is now sufficient to translate into an artificial intelligence model. For example, Jia et al. (2023) translate a measure of antidemocratic attitudes into a prompt for in-context learning by a large language model such as ChatGPT. Their work draws on Voelkel et al.’s antidemocratic attitude scale (Voelkel 2023), where, for instance, one variable captures “support for partisan violence.” The original survey questions turn into a prompt for a large language model:

Please rate the following message’s support for partisan violence from 1 to 3. Support for partisan violence is defined as “willingness to use violent tactics against outpartisans.” Examples of partisan violence include sending threatening and intimidating messages to the opponent party, harassing the opponent party on the Internet, using violence in advancing their political goals or winning more races in the next election.

Your rating should consider whether the following factors exist in the following message:

- A: Show support for partisan violence

- B1: Partisan name-calling

- B2: Emotion or exaggeration

Rate 1 if doesn’t satisfy any of the factors

Rate 2 if doesn’t satisfy A, but satisfies B1 or B2

Rate 3 if satisfies A, B1 and B2

After your rating, please provide reasoning in the following format:

Rating: ### Reason: (### is the separator)
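A reply in the requested format can be parsed mechanically. The sketch below is a minimal illustration, not the procedure used by Jia et al.: the function name `parse_reply` is hypothetical, and it assumes the model writes the numeric rating before the `###` separator and its free-text reasoning after it.

```python
import re


def parse_reply(reply: str) -> tuple[int, str]:
    """Split a model reply on the '###' separator into (rating, reasoning).

    Assumption: the rating (1, 2, or 3) appears before the separator and the
    reasoning after it; the label words around them are not inspected.
    """
    head, separator, tail = reply.partition("###")
    if not separator:
        raise ValueError("reply is missing the '###' separator")
    match = re.search(r"[1-3]", head)
    if match is None:
        raise ValueError("no rating between 1 and 3 found before the separator")
    # Drop everything up to the first colon (normally the label) and keep the rest.
    reasoning = tail.split(":", 1)[-1].strip()
    return int(match.group()), reasoning


# Hypothetical reply text, written only to exercise the parser.
rating, reasoning = parse_reply(
    "Rating: 2 ### Reason: Name-calling, but no support for violence."
)
print(rating, reasoning)
```

Raising an error on a malformed reply, rather than guessing a rating, keeps unparseable outputs out of any downstream score.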

The recipe for this translation is:

- Identify a social science construct that measures the societal value of interest (e.g., reducing partisan violence).

- Translate the social science construct into an automated model (e.g., adapting the qualitative codebook or survey into a prompt to a large language model).

- Test the accuracy of the AI model against validated human annotations.

- Integrate the model into the social media algorithm.
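The third step, checking the model against human annotations, might look like the sketch below. The commentary does not name a specific metric, so both choices here are assumptions: raw accuracy, plus Cohen's kappa as a common chance-corrected agreement statistic. The labels are made-up 1-to-3 ratings used only for illustration.

```python
from collections import Counter


def accuracy(human: list[int], model: list[int]) -> float:
    """Fraction of items where the model rating equals the human rating."""
    if len(human) != len(model) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(h == m for h, m in zip(human, model)) / len(human)


def cohens_kappa(human: list[int], model: list[int]) -> float:
    """Agreement between two raters, corrected for chance agreement."""
    observed = accuracy(human, model)
    n = len(human)
    human_counts = Counter(human)
    model_counts = Counter(model)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(
        human_counts[label] * model_counts[label] for label in human_counts
    ) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1.0 - expected)


# Hypothetical annotations: human codes vs. model ratings for six messages.
human_labels = [1, 1, 2, 3, 2, 1]
model_labels = [1, 1, 2, 3, 1, 1]
print(accuracy(human_labels, model_labels))
print(cohens_kappa(human_labels, model_labels))
```

Kappa is useful when one rating dominates, since a model that always outputs the most common rating can score high accuracy while agreeing with annotators no better than chance.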

Opening Up Pandora’s Feed

Who gets to decide which values are included? When there are differences, especially in multicultural, pluralistic societies, who gets to decide how they should be resolved? Going even further, embedding some societal values will inevitably undermine other values.

On one hand, certain values might seem like unobjectionable table stakes for a system operating in a healthy democracy: e.g., reducing content harmful to democratic governance by inciting violence, reducing disinformation and affective polarization, and increasing content beneficial to democratic governance by promoting civil discourse. But each of these is already complex. TikTok wants to be “the last sunny corner of the internet” (Voth 2020), which can imply demoting political content, and Meta’s Threads platform has expressly stated that amplifying political content is not their goal. If encoding societal values comes at the cost of engagement or user experience, is that a pro- or antidemocratic goal?

On the policy side: how should, or shouldn’t, the government be involved in regulating the values that are implicitly or explicitly encoded into social media AIs? Are these decisions considered protected speech by the platforms? In the United States context, would the First Amendment even allow the government to weigh in on the values in these algorithms? Does a choice by a platform to prioritize its own view of democracy raise its own free speech concerns? Given the global reach of social media platforms, how do we navigate the problems of an autocratic society imposing top-down values? Our position here is that an important first step is to make the values embedded in the algorithm explicit, so that they can be deliberated over.

A first step is to make sure that we model the values that are in conflict with each other. We cannot manage values if we cannot articulate them: for example, what might be harmed if an algorithm aims to reduce partisan animosity? Psychologists have developed a theory and measurement of basic values (Schwartz 2012), which we can use to sample values that are both similar and different across cultures and ethnicities.

We also need mechanisms for resolving value conflicts. Currently, platforms assign weights to each component of their ranking model, then integrate these weights to make final decisions. Our approach fits neatly into this existing framework. However, there is headroom for improved technical mechanisms for eliciting and making these trade-offs. One step might be increased participation, as determined through a combination of democratic participation and a bill of rights. A further step is to elicit tensions between these values and when each should be prioritized over another: for example, under what conditions might speech that increases partisan animosity be downranked, and under what conditions might it be amplified instead? What combination of automated signals and procedural processes will resolve this?
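To make the weighting idea concrete, here is a minimal sketch of a ranking score computed as a weighted sum of per-post signals, with a societal-value signal added as one more component. Everything in it is an assumption for illustration: the `Post` type, the signal names, the 0-to-1 scaling, and the weights are hypothetical and do not describe any platform's actual ranking model.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    signals: dict[str, float]  # hypothetical per-post signals scaled to 0..1


def score(post: Post, weights: dict[str, float]) -> float:
    """Weighted sum of signals; a signal missing from the post counts as 0."""
    return sum(w * post.signals.get(name, 0.0) for name, w in weights.items())


def rank(posts: list[Post], weights: dict[str, float]) -> list[Post]:
    """Order posts from highest to lowest weighted score."""
    return sorted(posts, key=lambda p: score(p, weights), reverse=True)


posts = [
    Post("a", {"engagement": 0.9, "partisan_violence": 1.0}),
    Post("b", {"engagement": 0.6, "partisan_violence": 0.0}),
]

# Engagement only: post "a" ranks first.
print([p.post_id for p in rank(posts, {"engagement": 1.0})])

# A negative weight on the societal-value signal downranks post "a".
weights = {"engagement": 1.0, "partisan_violence": -0.5}
print([p.post_id for p in rank(posts, weights)])
```

In this framing the value conflict becomes visible as a choice of weights, which is the quantity that participatory or procedural mechanisms would need to set.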

Is the right avenue a set of user controls, as in the “middleware” solutions pitched by Bluesky and others, is it platform-centric solutions, or both? Middleware sidesteps many thorny questions, but HCI has known for decades that very few users change defaults, so there need to be processes for determining those defaults.

Ultimately, our perspective is that feed algorithms always embed values, so we ought to be reflective and deliberative about which ones we include. An explicit modeling of societal values, paired with technical advances in natural language processing, will enable us to model a wide variety of constructs from the social and behavioral sciences, providing a powerful toolkit for shaping our online experiences.

Read our full commentary at JOTS.

Authors: Stanford CS associate professor Michael S. Bernstein, Stanford communications associate professor Angèle Christin, Stanford communications professor Jeffrey T. Hancock, Stanford CS assistant professor Tatsunori Hashimoto, Northeastern assistant professor Chenyan Jia, Stanford CS PhD candidate Michelle Lam, Stanford CS PhD candidate Nicole Meister, Stanford professor of law Nathaniel Persily, Stanford postdoctoral scholar Tiziano Piccardi, University of Washington incoming assistant professor Martin Saveski, Stanford psychology professor Jeanne L. Tsai, Stanford MS&E associate professor Johan Ugander, and Stanford psychology postdoctoral scholar Chunchen Xu.

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition. Learn more.