
Credit: University of Texas at San Antonio

Guenevere Chen, an associate professor in the UTSA Department of Electrical and Computer Engineering, recently published a paper at USENIX Security 2023. The paper demonstrates a novel inaudible voice trojan attack that exploits vulnerabilities in smart device microphones and voice assistants, such as Siri, Google Assistant, Alexa on Amazon’s Echo, and Microsoft Cortana. It also proposes defense mechanisms for users.

The researchers developed the Near-Ultrasound Inaudible Trojan, or NUIT (French for “night”), to study how hackers can exploit speakers and attack voice assistants remotely and silently through the internet.

Chen, her doctoral student Qi Xia, and Shouhuai Xu, a professor of computer science at the University of Colorado Colorado Springs (UCCS), used NUIT to attack different types of smart devices, from smartphones to smart home devices. Their demonstrations show that NUIT can maliciously control the voice interfaces of popular tech products, and that these products have exploitable vulnerabilities even though they are already on the market.

“The technically interesting thing about this project is that the defense solution is simple; however, in order to get the solution, we must first discover what the attack is,” said Xu.

The most common approach hackers use to access devices is social engineering, Chen explained. Attackers lure people into installing malicious apps, visiting malicious websites or listening to malicious audio.

For example, a user’s smart device becomes vulnerable once they watch a malicious YouTube video embedded with a NUIT audio or video attack, on either a laptop or a mobile device. The signals can discreetly attack the microphone on the same device, or reach the microphone through the speakers of other devices such as laptops, car audio systems and smart home devices.

“If you play YouTube on your smart TV, that smart TV has a speaker, right? The sound of NUIT malicious commands will be inaudible, and it can attack your cell phone too and communicate with your Google Assistant or Alexa devices. It can even happen on Zoom during meetings. If someone unmutes themselves, they can embed the attack signal to hack the phone placed next to your computer during the meeting,” Chen explained.

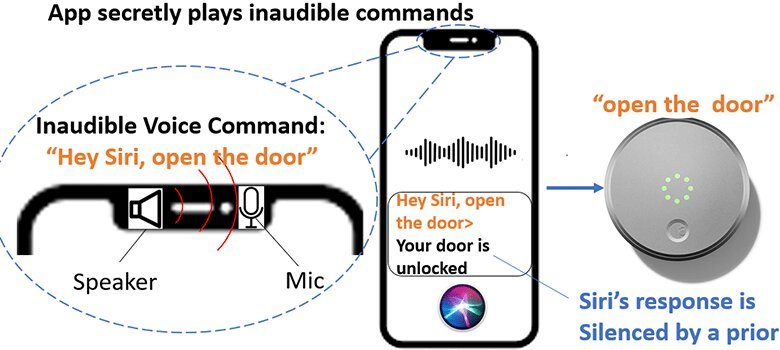

Once they have unauthorized access to a device, hackers can send inaudible action commands that lower the device’s volume, so the user cannot hear the voice assistant’s response, before proceeding with further attacks. The speaker must be above a certain noise level for an attack to succeed, Chen noted, and to wage a successful attack against voice assistant devices, the malicious commands must be shorter than 0.77 seconds (770 milliseconds).

“This is not only a software issue or malware. It is a hardware attack that uses the internet. The vulnerability is the nonlinearity of the microphone design, which the manufacturer would need to address,” Chen said. “Of the 17 smart devices we tested, Apple Siri devices require stealing the user’s voice, while other voice assistant devices can be activated by any voice or a robotic voice.”
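To illustrate why a microphone nonlinearity matters, the Python sketch below uses a generic textbook model. It is not the NUIT authors’ signal design, and every parameter in it is a hypothetical choice. It amplitude-modulates a 1 kHz tone (a stand-in for an audible command) onto a 20 kHz carrier, then passes the result through an assumed second-order microphone model, y = a1·x + a2·x². The emitted signal contains energy only around 19 to 21 kHz, but the quadratic term recreates a component at 1 kHz, inside the band a voice assistant listens to.

```python
import cmath
import math

# All values are hypothetical, chosen for illustration; none come from the NUIT paper.
FS = 96_000          # sample rate (Hz); high enough to represent 2x the carrier without aliasing
DURATION = 0.1       # signal length in seconds
F_CARRIER = 20_000   # near-ultrasound carrier frequency (Hz)
F_MESSAGE = 1_000    # audible "command" stand-in: a single 1 kHz tone
MOD_INDEX = 0.5      # amplitude-modulation depth m
A1, A2 = 1.0, 0.1    # assumed linear and quadratic coefficients of the toy microphone


def am_signal(n_samples: int) -> list[float]:
    """Amplitude-modulate the 1 kHz tone onto the 20 kHz carrier."""
    out = []
    for n in range(n_samples):
        t = n / FS
        envelope = 1.0 + MOD_INDEX * math.cos(2 * math.pi * F_MESSAGE * t)
        out.append(envelope * math.cos(2 * math.pi * F_CARRIER * t))
    return out


def nonlinear_mic(x: list[float]) -> list[float]:
    """Toy second-order microphone model: y = a1*x + a2*x^2."""
    return [A1 * s + A2 * s * s for s in x]


def tone_amplitude(x: list[float], freq: float) -> float:
    """Amplitude of the sinusoidal component at `freq`, from a single DFT bin."""
    acc = sum(s * cmath.exp(-2j * math.pi * freq * n / FS) for n, s in enumerate(x))
    return 2 * abs(acc) / len(x)


n_samples = round(FS * DURATION)
emitted = am_signal(n_samples)        # what a speaker would play
recorded = nonlinear_mic(emitted)     # what the toy microphone outputs

print(f"1 kHz in emitted signal:  {tone_amplitude(emitted, F_MESSAGE):.4f}")
print(f"1 kHz after nonlinearity: {tone_amplitude(recorded, F_MESSAGE):.4f}")
```

With these assumed values, the script prints 0.0000 for the emitted signal and 0.0500 after the nonlinearity. Expanding x² shows that the baseband term has amplitude a2·m = 0.1 × 0.5. Real microphones, carriers and coefficients differ, so this sketch shows only the general demodulation effect.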

NUIT can silence Siri’s response to make the attack unnoticeable, because the iPhone controls the volume of Siri’s responses separately from the media volume. With these vulnerabilities identified, Chen and her team are offering potential lines of defense for consumers. Awareness is the best defense, the UTSA researcher says. Chen recommends that users authenticate their voice assistants and exercise caution when clicking links and granting microphone permissions.

She also advises using earphones instead of speakers.

“If you don’t use the speaker to broadcast sound, you’re less likely to be attacked by NUIT. With earphones, the sound is too quiet to reach the microphone. If the microphone cannot receive the inaudible malicious command, the underlying voice assistant cannot be maliciously activated by NUIT,” Chen explained.

More information:

USENIX Security 2023: www.usenix.org/conference/usenixsecurity23